AI-Powered Cyberattacks: How Artificial Intelligence Is Changing the Threat Landscape

An AI-powered cyberattack, also known as an AI-enabled or offensive AI attack, employs AI/ML algorithms to execute malicious actions. By automating and augmenting traditional cyber tactics, these attacks become more sophisticated, narrowly targeted, and harder to detect. A worrying recent trend is the weaponization of AI, where attackers now use AI-driven tools such as ChatGPT to rapidly generate highly convincing and targeted phishing messages that previously required significant skill and resources. As AI-assisted techniques spread, they raise the risk to organizations and the security of their sensitive data.

AI cyberattacks progress in real time, changing their tactics to evade standard security measures and becoming harder to identify, in contrast to traditional threats that exhibit predictable patterns. BlackMatter ransomware, for example, uses AI-driven encryption logic combined with dynamic defensive probing to avoid endpoint detection and create maximum impact.

According to a recent survey by Darktrace, 78% of CISOs now say AI-powered cyberattacks are significantly impacting their organizations. This highlights how deeply AI is transforming the cyber threat landscape and increasing the stakes for defenders. In this blog, let us explore how attackers harness AI across every stage of a cyberattack, review real-world examples, and understand how organizations can outsmart AI cyber threats before they strike.

Key Takeaways:

- Discover what AI cyberattacks are and how they outsmart traditional defenses.

- Learn how attackers use AI for reconnaissance, phishing, malware, and evolving botnets.

- Understand common threats like deepfakes, AI ransomware, data poisoning, and multi-channel scams.

- See real-world cases and trends showing AI-driven attacks growing in sophistication.

- Explore strategies to defend with AI-powered detection, Zero Trust, and employee awareness.

What Are AI-Powered Cyberattacks?

An AI-powered cyberattack, sometimes called an AI-enabled or offensive AI attack, uses artificial intelligence and machine learning (ML) algorithms to execute malicious actions. By automating tasks and augmenting traditional cyberattack methods, these attacks become more precise, adaptive, and difficult to detect.

These attacks can take many forms, including phishing, ransomware, malware, or AI-driven social engineering. Their key danger lies in adaptability: AI allows the attack to analyze data, refine its approach, and adjust in real time to bypass defenses.

Types of AI-Driven Cyberattacks:

- Voice Impersonation Scams: Attackers use AI to mimic a CEO’s or executive’s voice, convincingly social engineer employees and trick them into making urgent wire transfers or approving other financial transactions without suspecting fraud.

- Automated Phishing Campaigns: AI generates highly realistic and personalized phishing emails in seconds, increasing the likelihood that targets will click on malicious links.

- Intelligent Vulnerability Scanning: Machine learning algorithms identify software weaknesses, helping attackers find entry points and evade traditional intrusion detection systems.

- AI Chat Scams: Chatbots powered by AI engage victims in casual conversations to extract sensitive personal details, login credentials, or other confidential information.

As AI continues to evolve, cybercriminals are developing more sophisticated and adaptive tactics, making it critical for organizations to deploy advanced security measures that can detect and counter AI-powered threats effectively.

Key capabilities attackers gain from AI

Attackers are using AI across every phase of an attack to move faster, scale operations, and stay ahead of defenses. Below are the core capabilities that make AI such a force multiplier for adversaries, followed by the main attack types enabled by those capabilities.

- Automated Attack Execution: Tasks that once needed hands-on keyboard are now automated. AI and generative tools can research targets, craft payloads, and even coordinate large-scale campaigns with minimal human oversight.

- Accelerated Reconnaissance: Reconnaissance means snooping for information before an attack; ML models (machine learning) can scan huge amounts of public and leaked data very quickly. They pull out useful clues and combine them into a clear “map” of targets and exploitable assets (weak systems, credentials, or services). From that map the models suggest the most likely attack routes, so attackers can plan intrusions faster and with less guesswork.

- Targeted Personalization: AI scrapes and profiles data from social media, corporate sites, and other sources to create highly relevant, timely, and personalized lures for phishing and social engineering.

- Adaptive Learning: AI models learn from each interaction and adapt in real time, refining tactics to avoid detection and exploit weaknesses more effectively.

- High-Value Target Identification: AI helps spot high value individuals inside an organization, those with broad access, weak security habits, or useful connections, and tailors attacks specifically to them.

Common Types of AI-Powered Cyberattacks (With Examples)

AI has introduced new levels of automation, precision, and deception to the cyber threat landscape. Attackers now use machine learning (ML) and generative AI to identify vulnerabilities, create adaptive malware, and manipulate human behavior across multiple platforms. Below are the most prevalent forms of AI-powered cyber attacks transforming modern cybercrime.

1. Reconnaissance and Vulnerability Discovery

AI accelerates the discovery phase of an attack by scanning massive codebases, public infrastructure, and exposed services to pinpoint weak points. ML algorithms can analyze system configurations, prioritize vulnerabilities based on exploitability, and suggest optimal attack paths that avoid detection. This automation allows attackers to perform detailed reconnaissance in minutes rather than days.

2. Automated Attack Deployment

AI enables attackers to launch complex operations with minimal human involvement. Generative tools can craft phishing messages, design malware payloads, and coordinate large-scale botnet campaigns simultaneously. These systems can dynamically adjust tactics in real time, altering payloads, communication patterns, and even timing to bypass evolving security defenses.

3. AI-Driven Social Engineering and Phishing

Attackers use AI to identify valuable targets, analyze their digital footprints, and craft personalized communications that mirror their tone or professional context. Generative AI creates convincing emails, chat messages, or even real-time voice interactions designed to extract credentials or financial data. In some cases, AI-powered chatbots simulate customer service agents to trick users into sharing sensitive information or installing malicious files.

4. Deepfake Scams and Voice Impersonation

Deepfake technology allows attackers to synthesize highly realistic videos, images, or voice recordings that imitate trusted figures such as executives, celebrities, or government officials. These forgeries are often used to promote fraudulent investments, authorize fake transactions, or manipulate employees into sharing confidential information. The realism of AI-generated media makes it increasingly difficult for victims to distinguish authentic communication from forgery.

5. AI-Empowered Malware and Botnets

Modern malware and botnets powered by ML can evolve autonomously to avoid signature and behavior-based detection. They can analyze their environment, modify their code, and choose encryption or exfiltration strategies that best fit the target network. Coordinated through AI-driven command systems, these botnets can execute large-scale attacks with adaptive precision while minimizing the risk of early discovery.

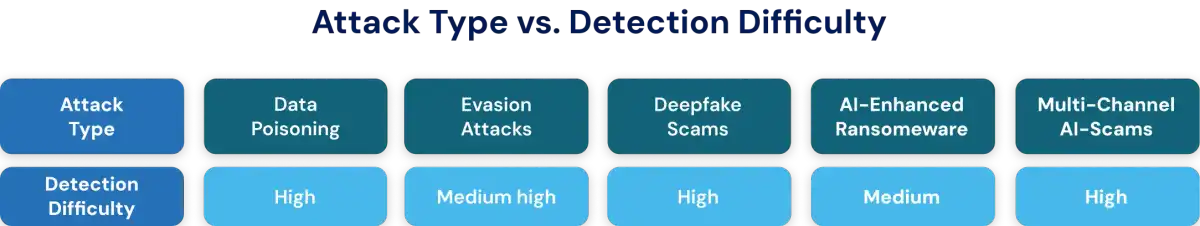

6. Data Poisoning and Evasion Attacks (Adversarial ML)

Adversarial attacks target the AI systems themselves. In data poisoning, attackers inject false or misleading information into training datasets to corrupt model accuracy or behavior. In evasion attacks, subtle modifications to input data such as altered pixels or phrasing trick AI models into making incorrect predictions. Both techniques exploit the model’s reliance on learned patterns, allowing attackers to manipulate outcomes without direct system compromise.

7. AI-Enhanced Ransomware

AI-enabled ransomware uses machine learning to identify high-value assets, detect backup systems, and prioritize encryption targets automatically. These attacks adapt to the victim’s environment, changing encryption techniques or ransom logic to maximize pressure and payout potential. The adaptive nature of AI-driven ransomware makes traditional defense and recovery processes far more challenging.

Real-World Examples of AI Cyberattacks

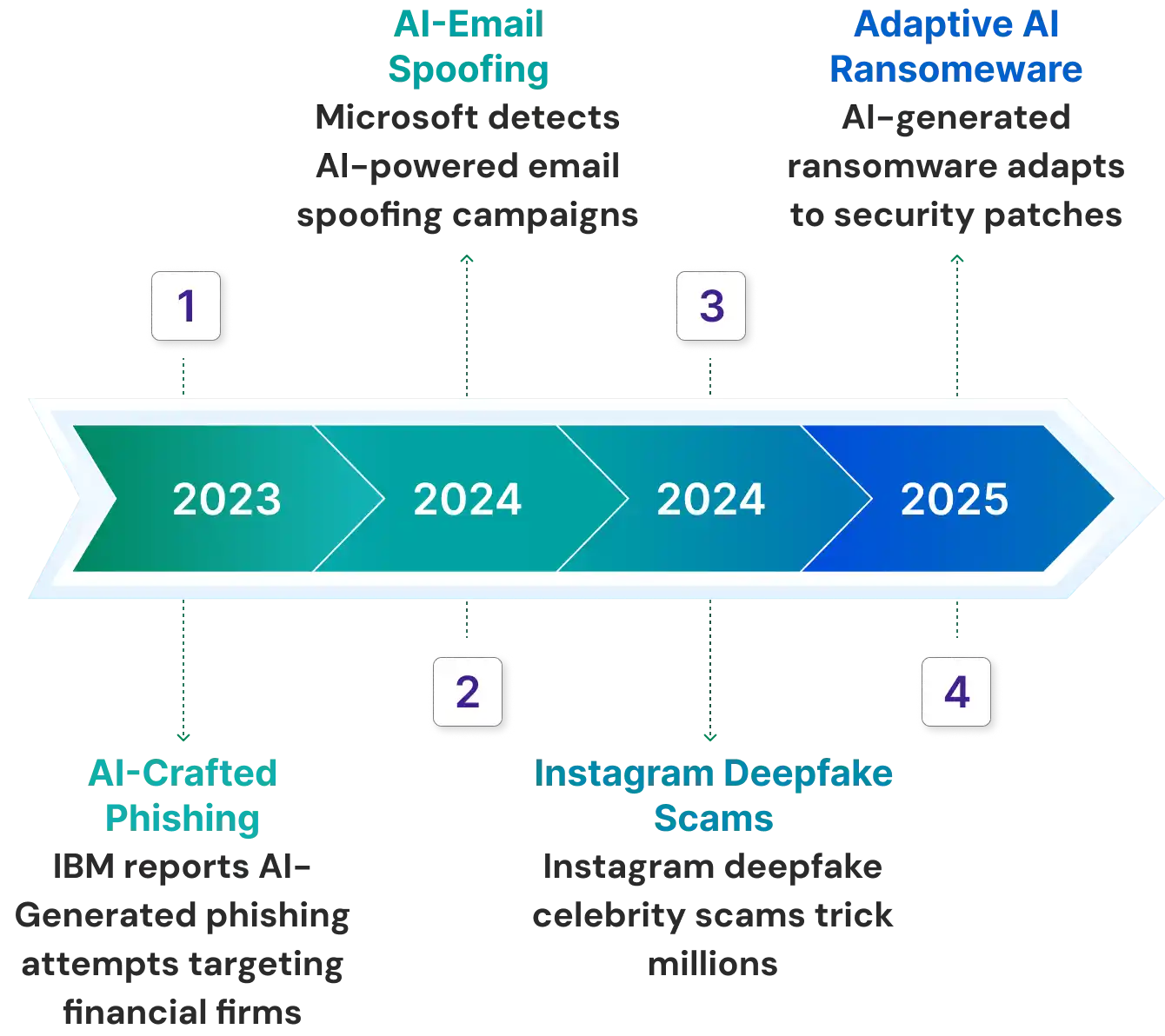

Recent years have already seen several high-impact incidents and campaigns that illustrate how AI and generative techniques are being weaponized in the wild. These real-world cases underscore that AI in cyberattacks is not speculative; it is happening today.

1. Microsoft / Enterprise Phishing Blocks & AI Payloads

Microsoft threat teams have flagged attempts where adversaries used AI to craft phishing payloads that more easily evade detection. These campaigns embed near-realistic personalization, subtle manipulations, or grammar and phrasing tuned to target victims’ style. Defenders report that AI-crafted phishing attempts are making signature and heuristic-based filters less reliable.

2. Deepfake Scam on Instagram & Voice Impersonation

According to a Times of India report, scammers have started using AI-generated deepfake videos and voice impersonation to mimic well-known celebrities and influencers on platforms like Instagram. These fabricated clips often show public figures appearing to endorse fake investment schemes or urge followers to transfer money. Such deceptive content, powered by generative AI, makes it increasingly difficult for users to tell real messages apart from forgeries, especially when circulated widely across social media networks.

3. Deepfake-Driven Financial Fraud & Data Leaks

There are documented cases where AI-generated audio or video recordings have been used to falsify instructions for fund transfers or internal communications. In one example, a fake audio message purportedly from a company executive was used to authorize transfers. In another, AI-generated video was used to mimic a senior official ordering disclosure of classified or internal data. These incidents, according to IBM, show how deepfakes can be weaponized not just for identity fraud but for operational compromise.

4. North Korean Operatives Using GenAI in Remote Work Fraud

According to Cybersecurity Dive, North Korean threat actors have leveraged generative AI to support large-scale remote work fraud schemes. Over 320 cases were detected in a 12-month window, in which GenAI tools were used to fabricate résumés, simulate online personas, generate video interview edges, and automate communications to evade detection. The attackers used AI to sustain credibility throughout the recruitment and employment lifecycle.

5. Scalable, AI-Powered Attacks in 2025

Recent research by Crowdstrike shows how adversaries are increasingly weaponizing AI across all phases of attack, enabling them to scale activity with fewer human operators. One notable example is a campaign that reportedly compromised over 320 companies in a year, embedding generative AI at every stage from reconnaissance to phishing and beyond. The same research observed that interactive, malware-free intrusions grew by 27 percent year over year as adversaries refined stealthier tactics. It also notes that voice phishing is on track to more than double its prior year’s volume, indicating that attackers see voice impersonation as a growing frontier.

Why AI Cyberattacks Are Hard to Detect

AI-powered cyberattacks are reshaping the cybersecurity battlefield by evolving in real time and adapting faster than traditional defenses can respond. Unlike conventional malware that follows predefined commands, AI-driven threats operate autonomously, learn from their environment, and modify their tactics on the fly to bypass even the most advanced detection systems.

1. Autonomous and Self-Evolving Threats

Modern AI malware no longer needs constant human oversight. Once deployed, it can act independently, scanning for vulnerabilities, changing its code structure, and disguising its activity patterns to stay undetected. These self-learning systems can recognize when they are being monitored and immediately adjust their behavior, making signature or rule-based defenses obsolete. A single infected endpoint can become a launchpad for large-scale infiltration as AI-enabled malware replicates itself across networks in minutes, overwhelming incident response teams.

2. Smarter, More Adaptive Attacks

AI has made traditional ransomware and malware campaigns far more intelligent and destructive. Machine learning algorithms help attackers identify and prioritize the most valuable assets, from financial databases to proprietary information, to maximize damage and ransom value. These systems can mimic legitimate user or system behavior, blending into normal network traffic and waiting for ideal attack windows such as off-hours or weekends to strike with minimal detection.

3. Hyper-Personalized Phishing and Social Engineering

The precision of AI-powered data analysis has revolutionized phishing and social engineering attacks. By mining massive datasets including social media posts, communication history, and browsing behavior, AI can craft hyper-personalized messages that feel authentic and relevant. These AI-generated phishing emails or messages might reference real transactions, colleagues, or company events, making them indistinguishable from legitimate correspondence. This level of contextual accuracy dramatically increases the success rate of cyber scams and makes traditional filtering tools ineffective.

4. The AI vs. AI Arms Race

The growing sophistication of AI cyber threats has led to what many experts call an AI vs. AI arms race. As attackers use AI to automate, adapt, and scale their tactics, defenders are being forced to deploy AI-driven security tools of their own such as behavioral analytics, anomaly detection, and predictive threat modeling to detect subtle deviations from normal activity. CrowdStrike and NIST emphasize that static, rule-based systems alone can no longer keep up with adversaries that learn and evolve faster than humans can respond.

How Organizations Can Defend Against AI-Powered Cyber Threats

AI technology has made it easier and faster for cybercriminals to launch sophisticated attacks, lowering the entry barrier for new threat actors while increasing the precision of established ones. Because AI-powered cyberattacks constantly evolve, static defenses and manual monitoring often fail to keep up. To stay secure, organizations must adopt proactive strategies that combine AI-driven defense, Zero Trust frameworks, and adaptive detection mechanisms.

1. Integrate AI into Threat Detection (XDR, SIEM, ML Analytics)

Organizations should deploy cybersecurity platforms that combine extended detection and response (XDR), security information and event management (SIEM), and machine learning analytics. These tools enable continuous monitoring across endpoints, cloud environments, and networks while identifying emerging threats in real time.

Establishing behavioral baselines helps detect deviations that indicate suspicious activity. User and Entity Behavior Analytics (UEBA) systems can identify anomalies in system and user behavior. Additionally, continuous analysis of input and output data for AI and ML systems can help detect adversarial manipulation before it escalates into a larger breach.

2. Enhance Human Verification (Deepfake Detection, Secondary Checks)

Deepfake-based impersonation is becoming one of the most convincing and dangerous forms of cyber deception. Organizations should implement multi-step verification processes for all critical transactions and communications, ensuring that voice or video-based instructions are cross-checked before execution.

Deploying AI-based deepfake detection tools that analyze texture, lighting, and audio inconsistencies can help flag forged media. Secondary confirmation methods, such as verified callbacks or secure internal chat confirmations, provide an additional layer of trust and authenticity.

3. Continuously Assess and Secure AI Systems

AI systems require constant evaluation to stay resilient against evolving threats such as data poisoning, model manipulation, and adversarial AI attacks. Continuous assessments help detect anomalies early, strengthen model integrity, and maintain trust in automated decision-making systems.

- Conduct regular security assessments of AI and ML systems to identify vulnerabilities and address them before exploitation.

- Deploy a comprehensive cybersecurity platform that enables continuous monitoring, intrusion detection, and endpoint protection across all environments.

- Establish behavioral baselines for system and user activity through User and Entity Behavior Analytics (UEBA) to detect deviations that may indicate an attack.

- Integrate behavioral analytics with endpoint, network, and cloud activity for a complete view of environmental threats.

- Implement real-time monitoring and analysis of input and output data in AI/ML systems to prevent data poisoning and model manipulation attempts.

4. Conduct AI-Focused Red Team Exercises

Traditional penetration tests are often not equipped to uncover vulnerabilities unique to AI systems. Conducting red team exercises that simulate AI-powered cyber threats helps security teams identify weaknesses in detection, data flow, and model behavior.

These exercises should include adversarial input testing, model stress analysis, and prompt injection simulations. Regularly updating these exercises ensures the organization’s defenses evolve in step with the rapidly changing threat landscape.

5. Employee Awareness Training (Phishing, Deepfake Recognition)

Human awareness is one of the strongest defenses against AI-driven social engineering. Security training programs should include modules on identifying AI-generated phishing messages, fake audio, and deepfake-based impersonations.

Employees should learn how to verify suspicious requests through approved internal channels before sharing data or credentials. Regular simulations of AI-based attack scenarios can improve detection instincts and reduce human error during real incidents.

6. Building a Future-Ready Cyber Defense

Just as attackers leverage AI to automate and personalize their methods, organizations can use the same technology to strengthen detection and response. AI-driven analytics can process massive data volumes, identify anomalies instantly, and recommend automated remediation. When combined with a Zero Trust architecture and adaptive threat intelligence, these strategies create a dynamic defense system capable of countering the next generation of AI-powered cyber threats.

The Future of Cybersecurity in the Age of AI

As AI continues its rapid advancement, it will not just influence attack techniques but will reshape the entire paradigm of how attacks and defenses interact. We are moving toward a future where autonomous threat agents, ethical AI frameworks, and explainable security systems become central to cybersecurity strategy.

1. Emerging Governance and Ethical Frameworks

To keep pace with AI’s pervasive role in cyber operations, regulatory and governance structures will become more critical. Initiatives such as the NIST AI Risk Management Framework (AI RMF) will guide how organizations build, deploy, and monitor AI systems securely. These frameworks aim to enforce transparency, accountability, and robustness in AI usage, including controls that prevent misuse, bias, or model drift.

In parallel, organizations will need to embed ethics into their defense architecture. That means designing AI systems that are explainable, auditable, and tightly constrained to prevent autonomous AI from crossing the line into behavior that violates privacy, compliance, or safety norms.

2. Rise of Autonomous Threat Response and AI-on-AI Warfare

We will see more attacks that operate independently, not waiting for a human hacker to command them. Large language model-based agents are already evolving toward independent decision-making capabilities in reconnaissance, social engineering, or exploitation tasks. As these autonomous agents proliferate, defending against them will require defense systems that can act with comparable autonomy.

On the flip side, defenders will increasingly rely on AI for AI strategies such as self-healing systems, autonomous triage, and containment by AI. Security platforms will need to evolve from passive alerting to proactive response, adapting defenses dynamically in real time.

3. What Lies Ahead: Challenges and Opportunities

- Scalability and collision risk: As AI-powered cyber attacks scale, the potential for collision or unintended consequences increases. Defense systems must manage risk across vast, interconnected environments.

- Explainability and trust: Organizations will demand AI systems whose decisions can be explained and validated. As complexity grows, black box models will become harder to trust in critical security roles.

- AI model protection: The future will place more emphasis on protecting AI backends, training pipelines, and model integrity. Attackers may attempt to corrupt or steal models directly.

- Convergence with other domains: AI-driven cyber operations will begin overlapping with robotics, IoT, and physical infrastructure. Threats will no longer remain purely digital.

In the coming era, cybersecurity will hinge on aligning detection, governance, and response under an AI-native approach. The defenders who win will be those who can embed trust, explainability, and agility at every layer of their security stack.

Final Thoughts

AI-powered cyberattacks are no longer a future threat, they are here and growing more sophisticated every day. Traditional defenses are not enough, which makes it critical for organizations to adopt AI-driven detection, strengthen Zero Trust strategies, and train employees to recognize evolving scams

At the same time, AI provides defenders with powerful capabilities to predict, detect, and respond faster than ever before. The future of cybersecurity will be shaped by how effectively we use AI, not just as a shield against attackers but as a foundation for building stronger, more resilient security systems.

To see how Tech Prescient, helps enterprises stay ahead of AI-powered threats with intelligent frameworks and automation-driven defenses –

Frequently Asked Questions (FAQs)

1. What is an AI-powered cyberattack?

An AI-powered cyberattack is when attackers use artificial intelligence or machine learning to plan, automate, and adapt their malicious activities. Unlike traditional attacks, these evolve in real time, making them faster and harder to detect. This allows attackers to scale operations with minimal human effort.2. How can AI be used in phishing or social engineering?

Generative AI enables attackers to craft hyper-personalized phishing emails, voice messages, or even videos. These mimic natural human tone and context, making them far more convincing than generic scams. As a result, victims are more likely to trust and engage with malicious content.3. Can AI defend against AI-driven attacks?

Yes, AI-powered defense tools such as anomaly detection and machine learning-based monitoring can quickly identify evolving threats. These systems analyze patterns at scale, spotting anomalies that traditional rule-based tools might miss. By automating response, AI helps reduce attack impact in real time.4. What are examples of AI cyberattacks in 2025?

Recent years have seen deepfake scams tricking employees, AI-coded malware adapting to evade defenses, and adaptive phishing campaigns spreading across channels. These attacks highlight how AI enhances deception and speed. The sophistication makes them more dangerous than older, static attack methods.5. How can organizations reduce the risk of AI attacks?

Companies can strengthen defenses by adopting Zero Trust frameworks and deploying AI-driven detection systems. Running regular adversarial tests helps uncover blind spots before attackers exploit them. Pairing technology with employee awareness training creates a layered security posture.